Replicate.com Review: Transcribing 100+ YouTube Videos for $9 using Cloud GPUs

I put Replicate.com to the test by running speech-to-text on 140 YouTube videos. The transcripts came to 530,524 words in total.

YouTube’s Data API provides transcripts, but they do not contain punctuation. Sentence boundaries are essential for my upcoming project, so I needed punctuated transcripts.

Cloud GPUs to the Rescue

Startups and individuals looking to get into AI face costs of tens of thousands of dollars if they want their own hardware.

Top NVIDIA GPUs, such as the H100, sell for around $40,000, and Meta plans to spend $18 billion on NVIDIA GPUs by the end of the year.

For indie hackers, it is important to move fast: validate ideas, determine technical feasibility, and build working proofs of concept.

That’s where serverless and Infrastructure-as-a-Service offerings can really help, so I decided to try Replicate.

As a side note, cloud vendor lock-in can become a problem for startups once they succeed. Cloud providers charge steep data egress fees to move your data out if you decide to switch. Read more about the costs of moving 50 TB between different cloud providers here.

What is Replicate.com?

Replicate does a good job of describing what they do.

“Replicate lets you run machine learning models with a cloud API, without having to understand the intricacies of machine learning or manage your own infrastructure. You can run open-source models that other people have published, or package and publish your own models. Those models can be public or private.” Replicate.com

So what exactly is a model?

“At Replicate, when we say “model” we’re generally referring to a trained, packaged, and published software program that accepts inputs and returns outputs.”

My Experience

My goal was to transcribe 140 YouTube videos. I wanted to use OpenAI Whisper or insanely-fast-whisper for speech recognition, but I needed a cloud GPU to run them.

While browsing Replicate.com, I found an existing model that combines insanely-fast-whisper with yt-dlp: it downloads a YouTube video and then transcribes it.

With a click of a button, I cloned the existing model to create my own private deployment. I configured the deployment to use an NVIDIA A40 GPU at $0.000725/sec ($2.61/hour) and set the number of instances to 10 so that all 140 videos would be processed in a reasonable amount of time.

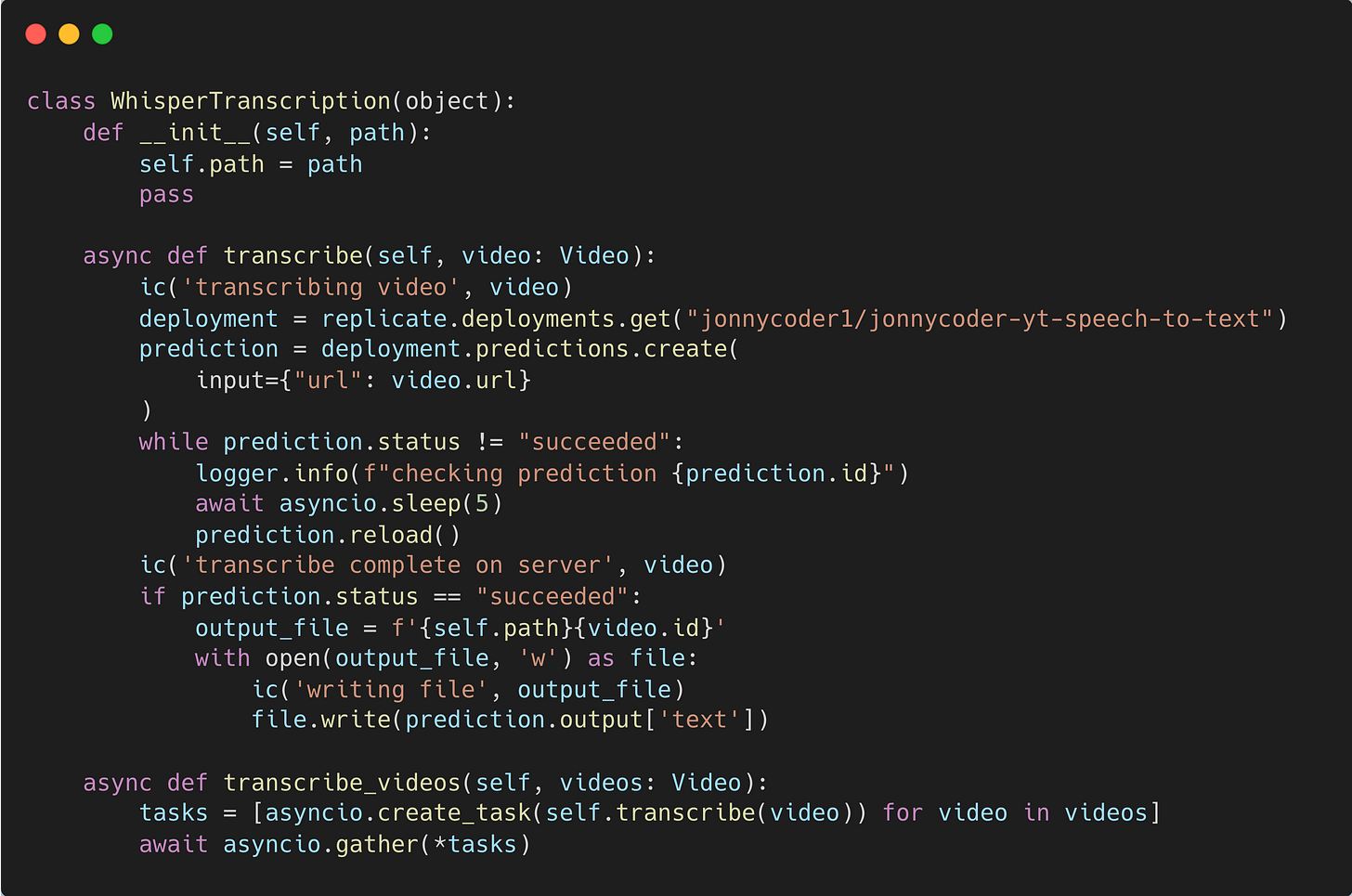

I wrote a Python class that integrates with my Replicate deployment to transcribe a list of YouTube video URLs.
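The class itself isn’t shown in this post, but a minimal sketch of the same idea could look like the following. It assumes the official `replicate` Python package (`replicate.deployments.get`, `deployment.predictions.create`, `prediction.wait`) and a `REPLICATE_API_TOKEN` environment variable. The class name, the deployment name, and the `url` input field are hypothetical, so check your cloned model’s input schema before using it.

```python
class YouTubeTranscriber:
    """Hypothetical helper that sends YouTube URLs to a private Replicate deployment."""

    def __init__(self, deployment_name, client=None, input_key="url"):
        if client is None:
            import replicate  # official client; reads REPLICATE_API_TOKEN from the environment

            client = replicate
        # deployment_name is "owner/name", e.g. "your-username/your-whisper-deployment"
        self.deployment = client.deployments.get(deployment_name)
        self.input_key = input_key  # assumed input field name; check your model's schema

    def transcribe_all(self, urls):
        """Return {url: model output}, or None for predictions that did not succeed."""
        # Create every prediction up front so the deployment can spread them across instances
        predictions = [
            self.deployment.predictions.create(input={self.input_key: url})
            for url in urls
        ]
        results = {}
        for url, prediction in zip(urls, predictions):
            prediction.wait()  # poll until the prediction reaches a terminal state
            results[url] = prediction.output if prediction.status == "succeeded" else None
        return results
```

Usage would be something like `YouTubeTranscriber("your-username/your-whisper-deployment").transcribe_all(urls)`. Creating every prediction before waiting on any of them is what lets the deployment fan the work out across its instances instead of handling one video at a time. The optional `client` argument only exists so the class can be exercised without network access.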

I enabled my deployment and started running my script. Here is a view of the dashboard for my deployment while it was running:

And here is a screenshot of the billing shortly after I finished:

Important Note: If your deployment is active and its minimum instance count is 1 or more, you will be charged for idle time too. Be sure to disable your deployment or set the minimum instances to 0 when you are done.
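To put that warning in numbers, here is a small sketch that estimates idle charges at the A40 rate quoted above. The function name is hypothetical, and it assumes idle instances are billed at the same per-second rate as busy ones, as the note implies.

```python
A40_PRICE_PER_SEC = 0.000725  # Replicate A40 price quoted in this post, in dollars per second


def idle_cost(min_instances, idle_hours, price_per_sec=A40_PRICE_PER_SEC):
    """Dollars spent keeping `min_instances` warm for `idle_hours` with no predictions running."""
    return round(min_instances * idle_hours * 3600 * price_per_sec, 2)


print(idle_cost(1, 24))  # 62.64: one warm A40 left on for a day
print(idle_cost(0, 24))  # 0.0: scaled to zero, nothing billed while idle
```

At that rate, a single forgotten warm instance costs about $62.64 per day, several times what this entire transcription job cost.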

My particular model took more than 4 minutes to start up, which explains why 28% of my bill was boot time.

Processing all videos completed in about 20 minutes.
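The billing numbers can be sanity-checked with a little arithmetic. This sketch assumes the whole ~$9 from the title was A40 time at the quoted rate and that each of the 10 instances booted once; the helper names are mine, not part of any Replicate API.

```python
A40_PRICE_PER_SEC = 0.000725  # Replicate A40 price quoted in this post, in dollars per second


def hourly_rate(price_per_sec):
    """Convert a per-second GPU price into dollars per hour."""
    return round(price_per_sec * 3600, 2)


def fleet_cost(instances, minutes, price_per_sec=A40_PRICE_PER_SEC):
    """Cost if every instance is billed for the whole wall-clock run."""
    return round(instances * minutes * 60 * price_per_sec, 2)


def boot_minutes_per_instance(total_bill, boot_share, instances, price_per_sec=A40_PRICE_PER_SEC):
    """Average boot time per instance implied by a bill and its boot-time fraction."""
    boot_seconds = total_bill * boot_share / price_per_sec
    return boot_seconds / instances / 60


print(hourly_rate(A40_PRICE_PER_SEC))                      # 2.61
print(fleet_cost(10, 20))                                  # 8.7
print(round(boot_minutes_per_instance(9.0, 0.28, 10), 1))  # 5.8
```

Under those assumptions, 10 instances billed for the full 20 minutes come to about $8.70, close to the $9 total, and 28% of $9 works out to roughly 5.8 minutes of boot per instance, in line with the 4+ minute startups.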

I’m fairly confident that the slow boot time comes from the dependencies of the model I cloned, which is packaged with Cog.

Cog is an open-source tool created by Replicate that packages machine learning models into Docker images, applying best practices for containerization and for managing dependencies across Python, CUDA, PyTorch, TensorFlow, and other libraries.

When testing out other models on Replicate, such as text-to-image models, I found the boot time to be very short.

What I Liked About Replicate

Replicate is more than just a cloud compute service like AWS. For me, its biggest strength was how easy it was to use.

Here are some features that stood out:

A catalog of public models ready to deploy and run privately right away. I quickly found an existing model that I didn’t have to develop myself.

An API with client libraries for major programming languages. I called my deployment’s API from Python.

Scaling from 0 to many instances, with billing usage visible in near real time.

A dashboard view of all running instances for each model.

Results of model runs are saved on the web dashboard for 1 hour.

Next Steps & Alternatives

I think the price I paid was high, but there is room for optimization.

For small projects, Google Colab offers T4 GPUs for free. I manually converted my Replicate Cog model into a Google Colab notebook and ran it successfully. Only later, when I re-read the insanely-fast-whisper README, did I notice that the project already provides a Google Colab notebook.
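For reference, a minimal sketch of a Colab version could look like this. It assumes `yt-dlp`, `transformers`, and `torch` are installed, that ffmpeg is available (it is preinstalled on Colab), and that a CUDA GPU such as the free T4 is attached. It uses the Hugging Face `transformers` automatic-speech-recognition pipeline, which insanely-fast-whisper builds on; the model ID, batch size, and helper names are my assumptions, not copied from the project’s notebook.

```python
"""Sketch: download YouTube audio with yt-dlp and transcribe it with Whisper on a Colab GPU."""


def download_audio(url, out_dir="audio"):
    """Download the best available audio stream with yt-dlp and return its file path."""
    import yt_dlp  # imported lazily so the other helpers work without it

    opts = {"format": "bestaudio/best", "outtmpl": f"{out_dir}/%(id)s.%(ext)s", "quiet": True}
    with yt_dlp.YoutubeDL(opts) as ydl:
        info = ydl.extract_info(url, download=True)
        return ydl.prepare_filename(info)


def build_asr_pipeline(model_id="openai/whisper-large-v3"):
    """Create a half-precision Whisper pipeline on the first CUDA GPU (e.g. a Colab T4)."""
    import torch
    from transformers import pipeline

    return pipeline(
        "automatic-speech-recognition",
        model=model_id,
        torch_dtype=torch.float16,
        device="cuda:0",
    )


def transcribe(paths, asr, batch_size=16):
    """Run the pipeline on each audio file and return {path: punctuated text}."""
    results = {}
    for path in paths:
        # Audio is split into 30-second chunks and decoded in batches;
        # lower batch_size if the GPU runs out of memory.
        out = asr(path, chunk_length_s=30, batch_size=batch_size, return_timestamps=True)
        results[path] = out["text"].strip()
    return results
```

In a notebook cell you would then call `transcribe([download_audio(url) for url in urls], build_asr_pipeline())`. Whisper’s output includes punctuation, unlike the YouTube transcripts mentioned at the start.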

Hugging Face is best known as the primary hub for machine learning models, and it also provides cloud compute through Inference Endpoints. Their pricing is similar to Replicate.com’s.

Below are two other cloud GPU providers that look exciting.

The first is Modal.com, which lets users write Python code and execute it remotely in the cloud.

The second is Runpod.io which provides pay-per-second serverless GPU computing with autoscaling.

Experiment and see what’s right for you!
